This is the last post in this blog. From now on I’ll be posting on my own server, in a much simpler static blog at http://nuclear.mutantstargoat.com/blog

Firefox drops ALSA; apulse to the rescue

December 11, 2017 — Nuclear

Once again I’m caught between a rock and a stupid place. Mozilla joined Harry Pottering and the eternal september of GNU/Linux, which carries on dead-set on tearing down everything simple and elegant in the userland, replacing it with crude imitations of the beast (MacOS X). So in an inspired move, firefox went bananas and dropped its ALSA audio backend with release 57. And everyone who doesn’t fancy spending 10% of their battery life on a byzantine audio contraption is left with silent southpark, and kung fury without the awesome 80s synths and the buttery voice of David Hasselhoff.

I tried switching to chromium for a month, but I just couldn’t stomach it. Now I always maintained that if everyone switches to pulse audio, I can always make a pulse wrapper over alsa, but I really didn’t want to have to deal with that. Thankfully, I didn’t have to bother, because a guy called Rinat Ibragimov beat me to it, and wrote apulse: a thin libpulse replacement which works with ALSA.

So in theory running apulse firefox should be sufficient to have sound in firefox 57 again. Unfortunately I had to take some more steps, and since I’m certain I’ll forget all about them next time I’m trying to do the same on another computer, I’ll keep a note here of the extra steps I had to take, to make apulse work with firefox on my computer.

Catching up: homebrew retro computer projects (Z80 and 68010)

December 4, 2017 — Nuclear

Another catchup post, about my homebrew retro-computer projects. This started in 2016 when I made an extremely rudimentary, completely lacking in I/O capability, but extremely educational Z80 computer. I uploaded a video about it on youtube, while it was still at the breadboard stage, which demonstrated very clearly how the Z80 computer fetches and executes instructions, by single-stepping the clock and inspecting the state of the data and address buses, as well as some important processor control signals at each step. Later I uploaded a short followup video to let it execute the program with a free-running clock. I also did a talk with the same demonstration at fosscomm 2016 with the final PCB version of this computer, but unfortunately there is no video of the event.

The next step was to make an improved homebrew computer, with proper I/O capabilities, which would be actually usable as a computer instead of just an educational demonstration. Initially I started designing an improved 8-bit computer based again on the Z80 processor, but soon I scrapped those plans and designed a 16-bit computer based on the Motorola 68000 processor instead.

The first video I uploaded about the 68k computer project was actually an attempt to familiarize myself with the processor by performing a similar single-stepping experiment again. This time I thought it would be fun to use a switches and LEDs panel similar to the old PDP or Altair interfaces to feed the processor with opcodes during the bus cycles. The video is somewhat long-winded, but I think if you skip the boring introduction, it’s also very educational, showing how to assemble a test program by hand, and how to coerce the 68k to single-step bus cycles while still keeping a continuously running clock, which is required for this processor to maintain its internal state.

I also uploaded a short followup progress report video shortly thereafter, with the computer constructed on a proper PCB, which shows the serial I/O interface and a bug in my initial design.

The computer works in this simple state, and I’m able to upload cross-compiled C programs through the serial port, and run them. It was a lot of fun reaching this stage, but then I got too lazy: I didn’t even upload a proper demonstration video for that, and I haven’t continued improving it for some time now.

To make it more interesting, I made a test program for my 68k computer which calculates a Koch snowflake fractal, and sends graphics commands to the serial terminal to draw it. Here’s a screenshot of xterm, which supports the vt330/vt340 ReGIS graphics commands.
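To give an idea of what such a program can look like, here is a minimal sketch in C. It is not the code that runs on the 68k computer. The function names, the triangle coordinates, and the choice of ReGIS commands are all assumptions made for illustration: `P[x,y]` moves the drawing position, `V[x,y]` draws a line to a point, `S(E)` erases the screen, and the whole thing is wrapped in the escape sequences that enter and leave ReGIS mode. Each edge is split in three, and the middle third is replaced by the two sides of a smaller triangle, recursively.

```c
#include <stdio.h>
#include <assert.h>

#define SIN60 0.8660254037844386

/* Round a non-negative coordinate to the nearest integer. */
static int iround(double x)
{
    return (int)(x + 0.5);
}

/* Draw one Koch curve edge from (x0,y0) to (x1,y1) as ReGIS vector
 * commands, assuming the drawing position is already at (x0,y0).
 * Returns the number of line segments emitted. */
static int koch_edge(FILE *out, double x0, double y0, double x1, double y1, int depth)
{
    double dx, dy, ax, ay, bx, by, px, py;
    int n = 0;

    assert(depth >= 0);
    if (depth == 0) {
        fprintf(out, "V[%d,%d]\n", iround(x1), iround(y1));
        return 1;
    }

    dx = (x1 - x0) / 3.0;
    dy = (y1 - y0) / 3.0;
    ax = x0 + dx;        ay = y0 + dy;        /* 1/3 point of the edge */
    bx = x0 + 2.0 * dx;  by = y0 + 2.0 * dy;  /* 2/3 point of the edge */
    /* apex of the bump: the middle third rotated by 60 degrees around (ax,ay) */
    px = ax + 0.5 * dx + SIN60 * dy;
    py = ay - SIN60 * dx + 0.5 * dy;

    n += koch_edge(out, x0, y0, ax, ay, depth - 1);
    n += koch_edge(out, ax, ay, px, py, depth - 1);
    n += koch_edge(out, px, py, bx, by, depth - 1);
    n += koch_edge(out, bx, by, x1, y1, depth - 1);
    return n;
}

/* Draw the whole snowflake; returns the total number of segments.
 * The triangle below is a hypothetical choice that fits an 800x480 screen
 * and is ordered so that the bumps point outwards (y grows downwards). */
int koch_snowflake(FILE *out, int depth)
{
    int n = 0;

    fputs("\033P0p", out);       /* enter ReGIS mode */
    fputs("S(E)\n", out);        /* erase the screen */
    fputs("P[550,340]\n", out);  /* move to the first vertex */
    n += koch_edge(out, 550, 340, 250, 340, depth);
    n += koch_edge(out, 250, 340, 400, 80, depth);
    n += koch_edge(out, 400, 80, 550, 340, depth);
    fputs("\033\\", out);        /* leave ReGIS mode */
    fflush(out);
    return n;
}
```

Calling `koch_snowflake(stdout, 3)` from `main` should draw the fractal on any terminal that understands ReGIS. For xterm that means a build with ReGIS support enabled, started in vt340 emulation.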

Catching up: SEGA Mega Drive (Genesis) PAL/NTSC mod

December 4, 2017 — Nuclear

Continuing with the 2017 catchup, another retro venture was acquiring a SEGA Mega Drive for the first time to hack, play some retro games, and watch mega drive demos. None of my software hacks are interesting enough to post at this point, but I did like a hardware mod I did, to add a switch to the back of the unit that flips the mega drive between PAL (Europe) and NTSC (US) modes. This was necessary, because some games and demos only work in one of the two modes, while some NTSC games work on PAL consoles like mine, but run way too slow.

If you want to see the details, head over to my regular website, where I uploaded a complete writeup of the PAL/NTSC hack.

Catching up: Amiga hardware hacks of 2017

December 4, 2017 — Nuclear

So since I’m not really posting anything on this blog regularly, I decided to do a few catching up posts from time to time, with any cool stuff I did since last I posted here. First up, my amiga hardware hacks.

I bought an Amiga 500 in 2017, something that I meant to do for a long time now, to play with it, hack it, and see what makes it tick. So I ended up doing a couple of hardware hacks for it, to improve what I thought needed improving to make it more enjoyable to use and hack on.

First thing I did was make a “trapdoor” RAM expansion using 30pin SIMMs. 512k RAM expansions (to bring the total RAM to 1MB) were so ubiquitous back in the A500’s heyday that not having one would be crippling.

Next up, I wanted to set up a convenient hacking environment on the amiga itself, which is extremely annoying with my single floppy drive. Most people on the A500 used to use an external floppy for this purpose, but I decided to skip that and make an ATA hard disk interface for the amiga 500 instead.

Finally, it was really inconvenient having to move the whole amiga, with all the cables dangling behind it, in front of my monitor to use it, which is necessary since the keyboard and the computer are one unit. Having to clear up space and manage the tangled mess of wires is not fun, so I decided to make a PS/2 keyboard controller for the A500, to allow me to connect an external standard PC keyboard, and leave the amiga tucked away on the corner of my desk out of my way.

Proper Oculus Rift DK2 setup on GNU/Linux

April 2, 2015 — Nuclear

This is a quick post about the proper way to set up the Oculus Rift DK2 on GNU/Linux, which might not be obvious for users not deeply familiar with the X window system.

Introduction

(feel free to skip)

I remember discussing this a long time ago in the oculus forums, back when the linux version of the oculus SDK was doing absurdities such as relying on Xinerama to detect and use the oculus display. Setting up the rift display as an extension of the desktop is fraught with peril and woe. Thankfully, the X window system is awesome, and was already dealing with such diverse multi-display contraptions back when burly men programmed computers made of pumps, grease, and steel… true story.

Initial thoughts on Vulkan

March 6, 2015 — Nuclear

I just finished watching the video of the Khronos GDC talk on Vulkan, and the corresponding slides, and I decided to share my initial thoughts on the new API, mostly in an attempt to consolidate them.

For those who missed the announcements of the last couple of days, Vulkan is supposed to be the successor to OpenGL, previously referred to as: glNext. The idea is to provide a low level API, basically a thin abstraction over the GPU hardware, along the lines of Apple Metal and AMD Mantle (as far as I’ve heard at least; I have no personal experience with either of them).

I’ve been back and forth for a while on whether I like or dislike this development, until I realized that all my objections were centered around the idea that Vulkan is going to replace OpenGL. And by that, I mean that somehow everyone using OpenGL today would switch over to Vulkan, something which is clearly not true. It is certainly talked about as a replacement for OpenGL, however in my opinion, it both is and isn’t. Let me explain…

OpenGL, from its inception, had a multiple personality disorder. This is clear just by reading the introductory chapter of the OpenGL specification document, which requires 4 consecutive sections, looking at OpenGL from different perspectives over the span of 3 pages, to attempt to define what OpenGL is:

- 1.1 What is the OpenGL Graphics System?

- 1.2 Programmer’s View of OpenGL

- 1.3 Implementor’s View of OpenGL

- 1.4 Our View

For me, and my daily hacking, OpenGL plays two distinct roles:

- A way to access and utilize the GPU hardware, to take advantage of its capability to accelerate the various graphics algorithms required for real-time rendering.

- A convenient 3D rendering library which is low level enough to not introduce obstacles in the form of cumbersome abstractions, while hacking graphics algorithms, yet high-level enough to make experimentation possible without necessarily having to write frameworks and abstractions for every task.

Based on that, it is now clear to me that Vulkan is indeed a very good replacement for the first use case, but completely unsuitable for the second. That’s why I think that Vulkan is not really a replacement of OpenGL. Vulkan and OpenGL can, and should coexist!

Vulkan looks like a perfect target for the reusable frameworks and engines. It provides some great opportunities for optimizations. I’m particularly excited about finally being able to multi-thread rendering code, without jumping through hoops (and performance bottlenecks) to serialize all API interactions through “the context thread”. I love the idea of having the API only deal with a concise intermediate representation of the shading language, which facilitates experimentation with new shading language frontends, off-line shader compilers, and avoids having to work around multiple vendors’ parser bugs and idiosyncrasies. I also like the idea of being able to manage the allocation and use of GPU resources, and the flow of data.

But, at the same time, I want the ease of use of OpenGL for my day to day hacks and experiments. I want immediate mode, matrix stacks, and the option of not having to care whether a texture or vertex buffer is currently backed by GPU memory or not.

I’ll go one step further, and this is really the gist of my thoughts on the matter: Not only should there be an OpenGL implementation over Vulkan, to use for experimental programs and one-off hacks, but ideally both should be seamlessly interoperable within the same program. Think of how powerful it would be to be able to start with an OpenGL prototype, and then go to the metal with Vulkan wherever you want to optimize! This way, to put it in 90s terms: Vulkan could be the assembly language of OpenGL.

Anyway, this pretty much sums up my initial thoughts on the Vulkan announcement. It’s still quite early and Vulkan itself is still in flux, so I guess we’ll have to wait and see how the whole thing turns out. But one thing is clear to me: Vulkan is very exciting, but OpenGL isn’t going to go away any time soon.

Tutorial: Practical makefiles, by example

February 9, 2015 — Nuclear

I know a lot of programmers who are afraid of makefiles. Most of them come from a windows background, and when they migrate to GNU/Linux or MacOS X they naturally gravitate towards clunky IDEs to manage their build process. Others, fearing that makefiles are going to be too complex to manage manually, try to use even clunkier makefile (or project file) generators like cmake.

While none of the above solutions is without some merit in particular cases, I strongly believe that there’s no faster and simpler way to build your project, than writing a small makefile. And in order to dispel the fear, I decided to write a tutorial about how to write simple, practical makefiles, for your programs.

The article is too big and too … structured, for my idea of what a blog post should be, so I’m hosting it on my web site along with some of my previous articles instead.

So anyway here it is, let me know if you find it useful: Practical makefiles, by example.

Feel free to use the comments section in this post for any feedback, or send me an email if you prefer.

Btw, I used reStructuredText, and the docutils translators for this. So there’s also a PDF version, produced through the rst2latex translator. It’s not as good as it could have been if I wrote it directly in LaTeX, but I suppose it’s serviceable if you prefer a printable off-line version.

How to use standard output streams for logging in android apps

November 3, 2014 — Nuclear

Android applications are strange beasts. Even though under the hood it’s basically a UNIX system using Linux as its kernel, there are enough other layers between (native) android apps and the bare system that some things are bound to fall through the cracks.

When developing android apps, one generally doesn’t log in to the actual device to compile and execute the program; instead a cross-compiler is provided by google, along with a series of tools to package, install, and execute the app. The degrees of separation from the actual controlling terminal of the application make it harder to just print debugging messages to the stdout and stderr streams and watch the output like one could do while hacking a regular program. For this reason, the android NDK provides a set of logging functions, which append the messages to a global log buffer. The log buffer can be viewed and followed remotely, from the development machine, over USB, by using the “adb logcat” tool.

This is all well and good, but if you’re porting a program which prints a lot of messages to stdout/stderr, or if you just like the convenience of using the standard printf/cout/etc functions, instead of funny looking stuff like __android_log_print(), you might wonder if there is a way to just use the standard I/O streams instead, right? Well so did I, and guess what… this is still UNIX so the answer is of course you can!
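One common way to do this, sketched below, is to create a pipe, point file descriptors 1 and 2 at its write end with dup2(), and have a background thread read lines from the other end and hand them to the android log. Treat this as an illustration under assumptions, not necessarily the approach the full post takes: the function names (`log_line`, `relay_lines`, `redirect_stdio_to_log`) and the `"myapp"` tag are made up for this example, and `__android_log_write` is only called when compiling for android, so on any other system the lines are just counted and dropped.

```c
#include <stdio.h>
#include <string.h>
#include <unistd.h>
#include <pthread.h>
#ifdef __ANDROID__
#include <android/log.h>
#endif

#define LOG_TAG "myapp"   /* hypothetical log tag */

/* Hand one line of text to the android log (no-op on other systems). */
static void log_line(const char *line)
{
#ifdef __ANDROID__
    __android_log_write(ANDROID_LOG_INFO, LOG_TAG, line);
#else
    (void)line;
#endif
}

/* Read text from fd until EOF and log it line by line.
 * Returns the number of lines logged. */
int relay_lines(int fd)
{
    char buf[512];
    size_t len = 0;
    ssize_t n;
    int count = 0;

    while ((n = read(fd, buf + len, sizeof buf - 1 - len)) > 0) {
        char *start = buf, *nl;
        len += (size_t)n;
        buf[len] = 0;
        while ((nl = strchr(start, '\n'))) {
            *nl = 0;
            log_line(start);
            count++;
            start = nl + 1;
        }
        /* keep the unfinished line at the start of the buffer */
        len = strlen(start);
        memmove(buf, start, len);
        if (len == sizeof buf - 1) {   /* overlong line: log it as is */
            buf[len] = 0;
            log_line(buf);
            count++;
            len = 0;
        }
    }
    if (len > 0) {                     /* last line without a newline */
        buf[len] = 0;
        log_line(buf);
        count++;
    }
    return count;
}

static void *relay_thread(void *arg)
{
    relay_lines(*(int*)arg);
    return 0;
}

/* Redirect stdout and stderr into a pipe drained by a background thread.
 * Returns 0 on success, -1 on failure. */
int redirect_stdio_to_log(void)
{
    static int pfd[2];
    pthread_t thr;

    setvbuf(stdout, 0, _IOLBF, 0);   /* flush stdout at every newline */
    setvbuf(stderr, 0, _IONBF, 0);   /* keep stderr unbuffered */

    if (pipe(pfd) == -1) return -1;
    if (dup2(pfd[1], STDOUT_FILENO) == -1) return -1;
    if (dup2(pfd[1], STDERR_FILENO) == -1) return -1;

    if (pthread_create(&thr, 0, relay_thread, &pfd[0]) != 0) return -1;
    pthread_detach(thr);
    return 0;
}
```

With something like this in place, calling `redirect_stdio_to_log()` once at startup should be enough for plain printf and fprintf(stderr, ...) output to show up in “adb logcat” under the chosen tag.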

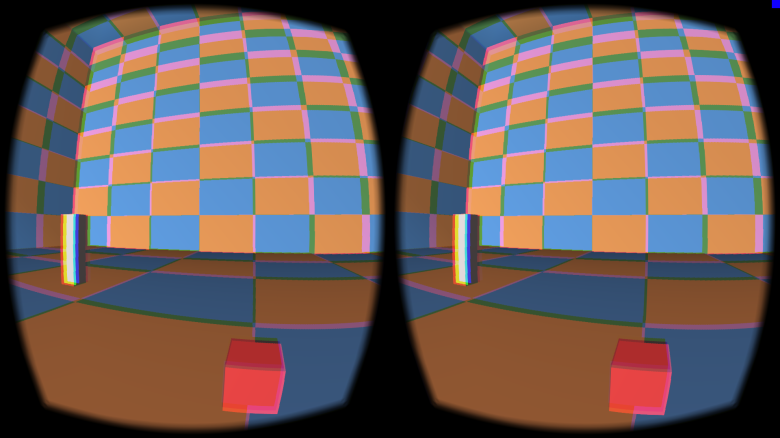

OculusVR SDK and simple oculus rift DK2 / OpenGL test program

September 7, 2014 — Nuclear

Edit: I have since ported this test program to use LibOVR 0.4.4, and it works fine on both GNU/Linux and Windows.

Edit2: Updated the code to work with LibOVR 0.5.0.1, but unfortunately they removed the handy function which I was using to disable the obnoxious “health and safety warning”. See the oculus developer guide on how to disable it system-wide instead.

I’ve been hacking with my new Oculus Rift DK2 on and off for the past couple of weeks now. I won’t go into how awesome it is, or how VR is going to change the world, and save the universe or whatever; everybody who cares, knows everything about it by now. I’ll just share my experiences so far in programming the damn thing, and post a very simple OpenGL test program I wrote last week to try it out, that might serve as a baseline.