Another catch-up post, this time about my homebrew retro-computer projects. This started in 2016, when I built a Z80 computer that was extremely rudimentary and completely lacking in I/O capability, but extremely educational. I uploaded a video about it on YouTube while it was still at the breadboard stage. The video demonstrates very clearly how the Z80 fetches and executes instructions, by single-stepping the clock and inspecting the state of the data and address buses, as well as some important processor control signals, at each step. Later I uploaded a short followup video which lets it execute the program with a free-running clock. I also gave a talk with the same demonstration at FOSSCOMM 2016, using the final PCB version of this computer, but unfortunately there is no video of the event.

The next step was to make an improved homebrew computer with proper I/O capabilities, one that would actually be usable as a computer instead of just an educational demonstration. Initially I started designing an improved 8-bit computer, again based on the Z80 processor, but I soon scrapped those plans and designed a 16-bit computer based on the Motorola 68000 processor instead.

The first video I uploaded about the 68k computer project was actually an attempt to familiarize myself with the processor by performing a similar single-stepping experiment. This time I thought it would be fun to use a panel of switches and LEDs, similar to the old PDP or Altair front panels, to feed the processor with opcodes during the bus cycles. The video is somewhat long-winded, but I think that if you skip the boring introduction, it’s also very educational. It shows how to assemble a test program by hand, and how to coerce the 68k into single-stepping bus cycles while still keeping the clock running continuously, which this processor requires in order to maintain its internal state.

I also uploaded a short followup progress report video shortly thereafter, with the computer constructed on a proper PCB, which shows the serial I/O interface and a bug in my initial design.

The computer works in this simple state, and I’m able to upload cross-compiled C programs through the serial port and run them. It was a lot of fun reaching this stage, but then I got lazy: I never uploaded a proper demonstration video for it, and I haven’t continued improving it for some time now.

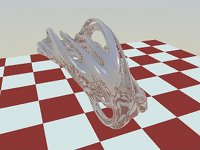

To make it more interesting, I made a test program for my 68k computer which calculates a Koch snowflake fractal, and sends graphics commands to the serial terminal to draw it. Here’s a screenshot of xterm, which supports the VT330/VT340 ReGIS graphics commands.
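
For readers curious what such a program might look like, here is a minimal sketch in C. It is not the actual test program that ran on the 68k board, just an illustration of the idea under a few assumptions: the function names (`koch_regis`, `koch_edge`, `px`) are made up for this example, the drawing area is assumed to be the usual 800x480 ReGIS canvas with the origin at the top left, and the output goes to a `FILE *` instead of a serial port routine. It only uses three basic ReGIS commands: `S(E)` to erase the screen, `P[x,y]` to move the cursor, and `V[x,y]` to draw a line to an absolute position.

```c
#include <assert.h>
#include <stdio.h>

#define SIN60 0.8660254037844386 /* sqrt(3)/2 */

/* Round a non-negative coordinate to the nearest integer pixel. */
static int px(double v) { return (int)(v + 0.5); }

/* Draw one Koch edge from (x0,y0) to (x1,y1), assuming the ReGIS cursor
 * is already at (x0,y0). Returns the number of line segments emitted. */
static int koch_edge(FILE *out, double x0, double y0,
                     double x1, double y1, int depth)
{
    if (depth == 0) {
        /* V[x,y] draws a line from the cursor to an absolute position */
        fprintf(out, "V[%d,%d]\n", px(x1), px(y1));
        return 1;
    }
    /* one third of the edge vector */
    double dx = (x1 - x0) / 3.0, dy = (y1 - y0) / 3.0;
    double ax = x0 + dx, ay = y0 + dy; /* point at 1/3 of the edge */
    double bx = x1 - dx, by = y1 - dy; /* point at 2/3 of the edge */
    /* tip of the bump: the third rotated by 60 degrees, away from the
     * center for the vertex order used in koch_regis (y grows downward) */
    double tx = ax + dx * 0.5 + dy * SIN60;
    double ty = ay - dx * SIN60 + dy * 0.5;

    return koch_edge(out, x0, y0, ax, ay, depth - 1)
         + koch_edge(out, ax, ay, tx, ty, depth - 1)
         + koch_edge(out, tx, ty, bx, by, depth - 1)
         + koch_edge(out, bx, by, x1, y1, depth - 1);
}

/* Write a complete ReGIS drawing of a Koch snowflake to out.
 * Returns the total number of line segments (3 * 4^depth). */
int koch_regis(FILE *out, int depth)
{
    /* equilateral triangle centered at (400,240), circumradius 200 */
    const double vx[3] = { 400.0, 573.2, 226.8 };
    const double vy[3] = { 40.0, 340.0, 340.0 };
    int i, n = 0;

    assert(depth >= 0 && depth <= 6); /* deeper levels add no visible detail */

    fputs("\033P0p", out);  /* DCS introducer: enter ReGIS mode */
    fputs("S(E)\n", out);   /* erase the screen */
    fprintf(out, "P[%d,%d]\n", px(vx[0]), px(vy[0])); /* move to first vertex */
    for (i = 0; i < 3; i++) {
        int j = (i + 1) % 3;
        n += koch_edge(out, vx[i], vy[i], vx[j], vy[j], depth);
    }
    fputs("\033\\", out);   /* ST: leave ReGIS mode */
    return n;
}
```

Calling `koch_regis(stdout, 3)` from a small `main` on a desktop machine should draw the snowflake in any terminal that understands ReGIS. On the homebrew computer, the `fprintf` and `fputs` calls would presumably be replaced by whatever routine writes characters to the serial port.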